Welcome to the XMPP Newsletter, great to have you here again! This issue covers the month of February 2026.

The XMPP Newsletter is brought to you by the XSF Communication Team.

Just like any other product or project by the XSF, the Newsletter is the result of the voluntary work of its members and contributors. If you are happy with the services and software you may be using, please consider saying thanks or helping these projects!

Interested in contributing to the XSF Communication Team? Read more at the bottom.

XSF Announcements

XMPP logo in Font Awesome

Just as we announced back in the December 2025 issue of our Newsletter, the official XMPP logo has been bundled with Font Awesome since version 7.2.0. And it looks Awesome!

XMPP Events

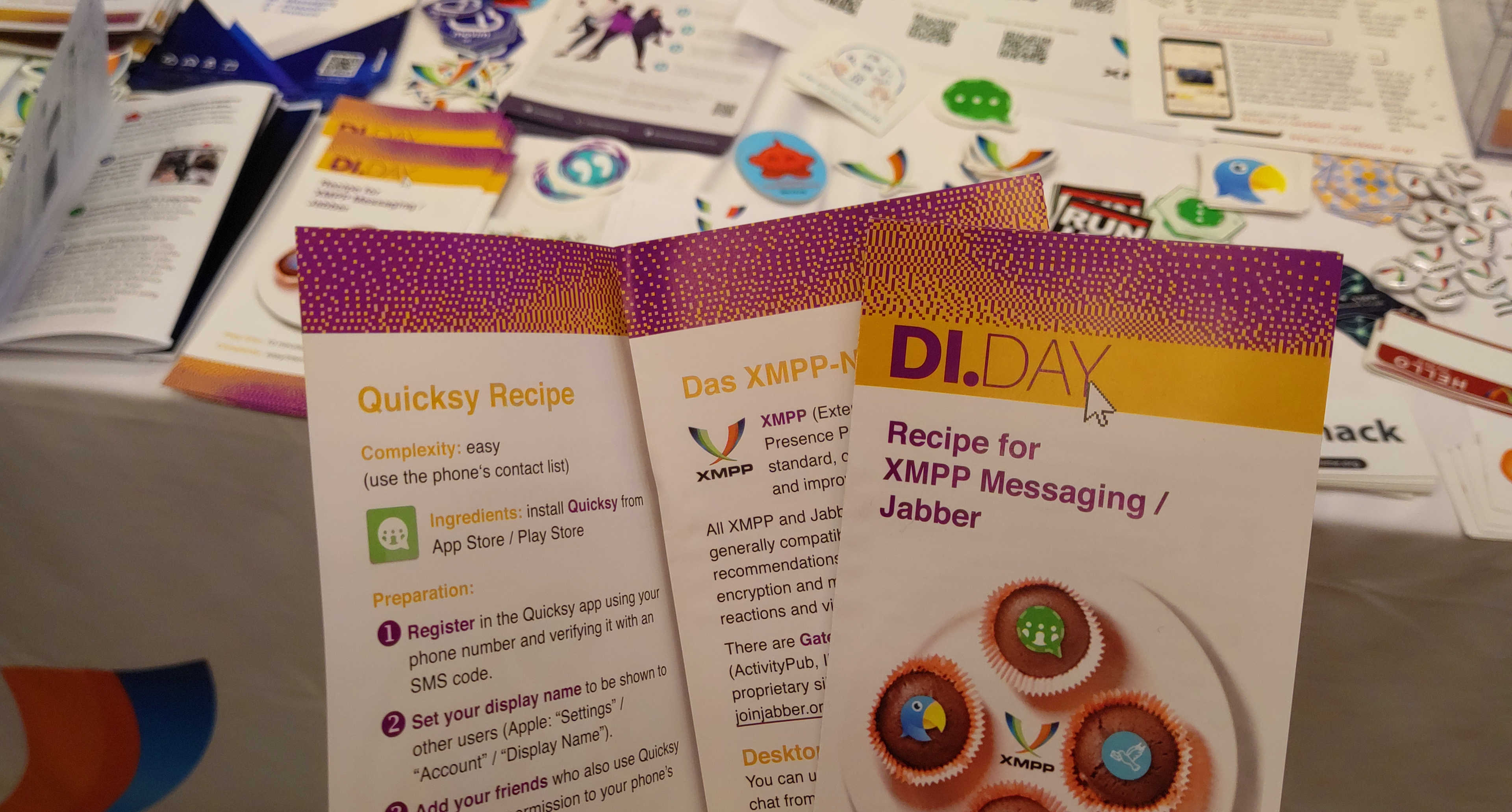

- XMPP material for DI.DAY: four recipes from the XMPP community for using an open chat protocol on ‘Digital Independence Day’ (DI.DAY), a German initiative, to help people get started with independent messaging!

Four recipes for an open chat protocol at the Digital Independence Day!

- XMPP Sprint in Berlin (DE / EN): will take place from Friday, June 19th to Sunday, June 21st, 2026, at the Wikimedia Deutschland e.V. offices in Berlin, Germany. If this sounds like the right event for you, come and join us! Just make sure to list yourself here, so we know how many people will attend and can plan accordingly. If you have any questions or concerns, join us in the chatroom: sprints@muc.xmpp.org!

Videos and Talks

XMPP Articles

- Upcoming changes to Let’s Encrypt and how they affect operators, by The Prosody Team for Prosodical Thoughts.

- Apprise, Push Notifications that work with just about every platform, has restored its XMPP support using Slixmpp after more than four years. There is no stable release yet, but one is coming.

- Bye bye Discord, hello Movim!, by Timothée Jaussoin for the Movim Blog.

- A (big) incoming memory optimization in Movim, by Timothée Jaussoin for the Movim Blog.

- Help Movim Reach Its Goals for 2026 by Timothée Jaussoin for the Movim Blog.

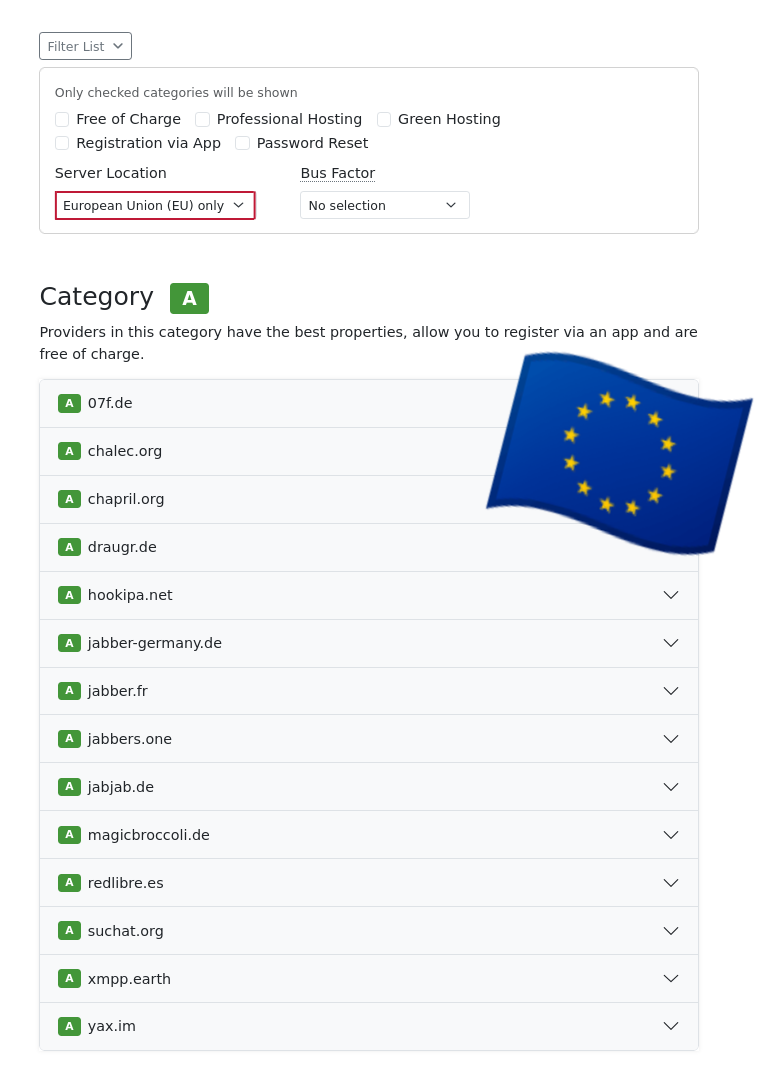

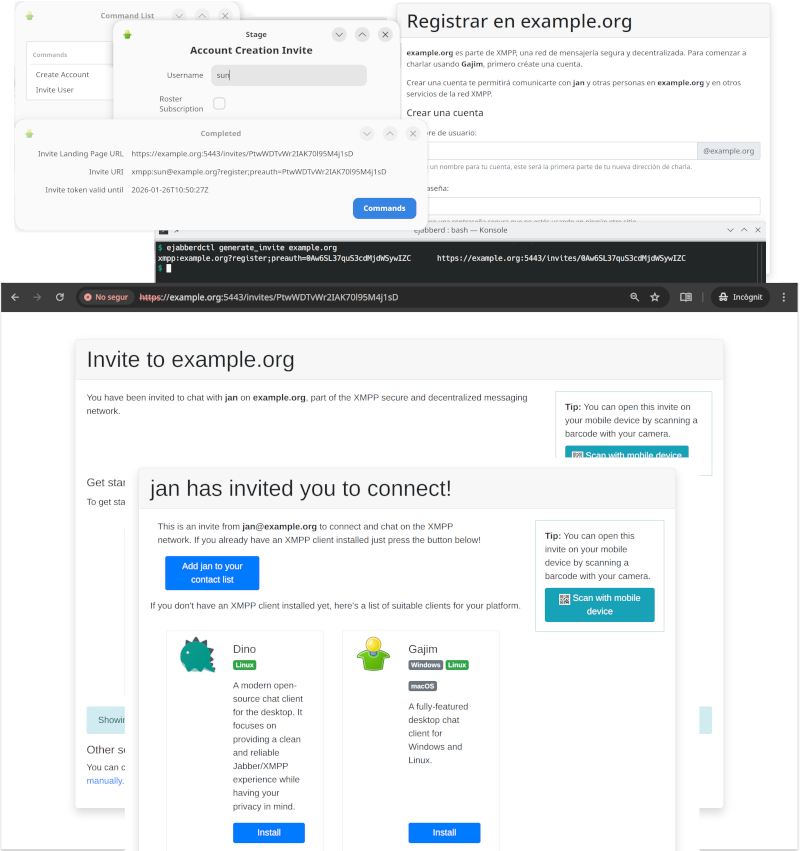

- Great Invitations, a tutorial that outlines how to create invites for the most popular clients according to XEP-0401 (Ad-hoc Account Invitation Generation), from the tutorials section at joinjabber.org.

- Visite nossa cozinha, by Isadora for the isacloud.im blog. [PT_BR]

- About Snikket and Prosody IM: relationship & goals in the XMPP ecosystem, by MattJ on Hacker News.

- Running My Own XMPP Server, by danny for the dmcc blog.

- MembeBot: ohhh I member: ¿Listo para implementar tu propio sistema de notificaciones de eventos en calendario sin depender de gigantes tecnológicos? by TheCoffeMaker for Cyberdelia. [ES]

- Introducing Wimsy: A cross-platform XMPP client built with Flutter.

XMPP Software News

XMPP Clients and Applications

- Conversations has released versions 2.19.10, 2.19.11 and 2.19.12 for Android. These versions introduce refactored QR code scanning and URI handling, fix rotation issues in tablet mode and offer to delete messages when banning someone in a public channel, among other things. You can take a look at the changelog for all the details.

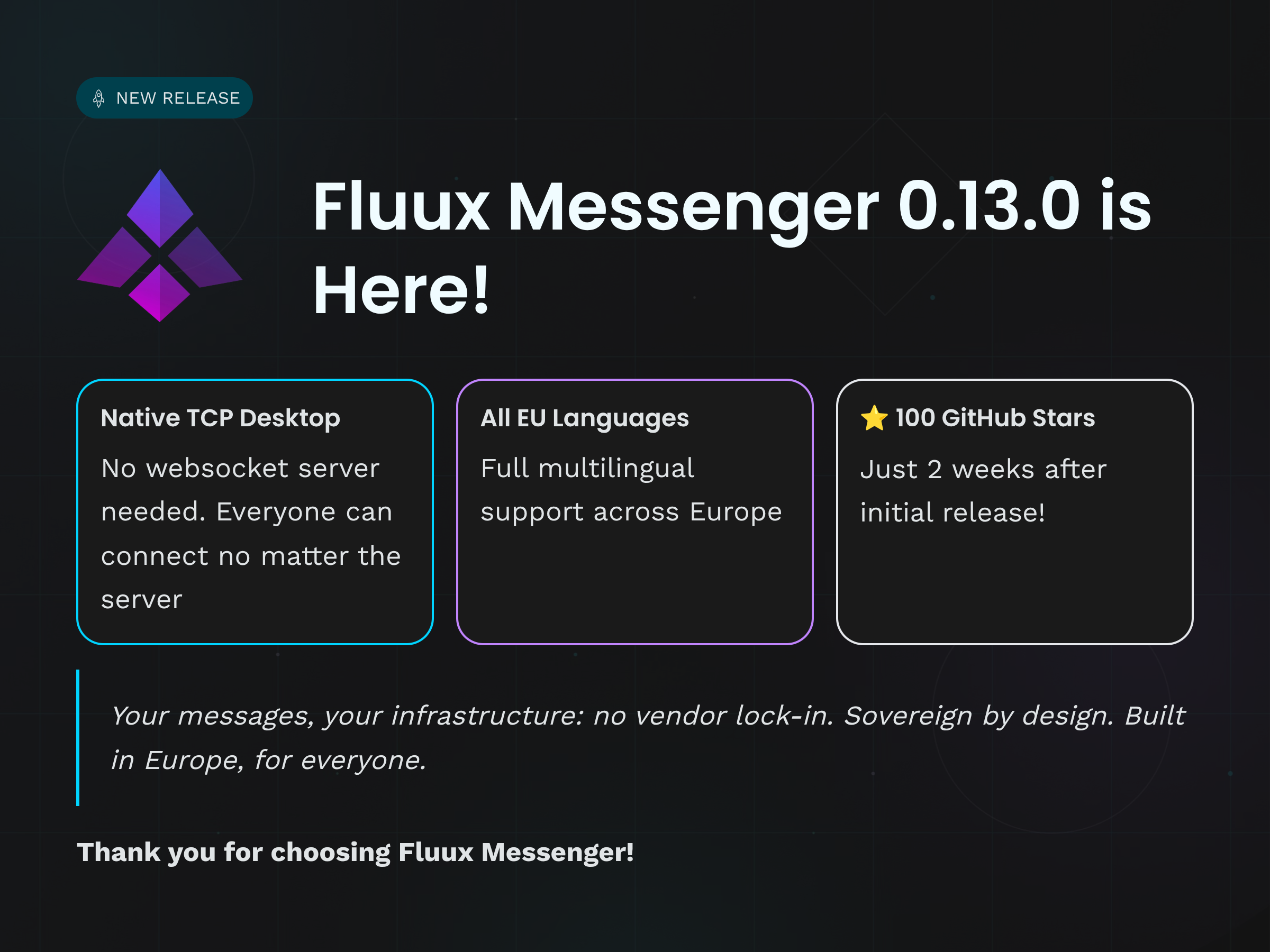

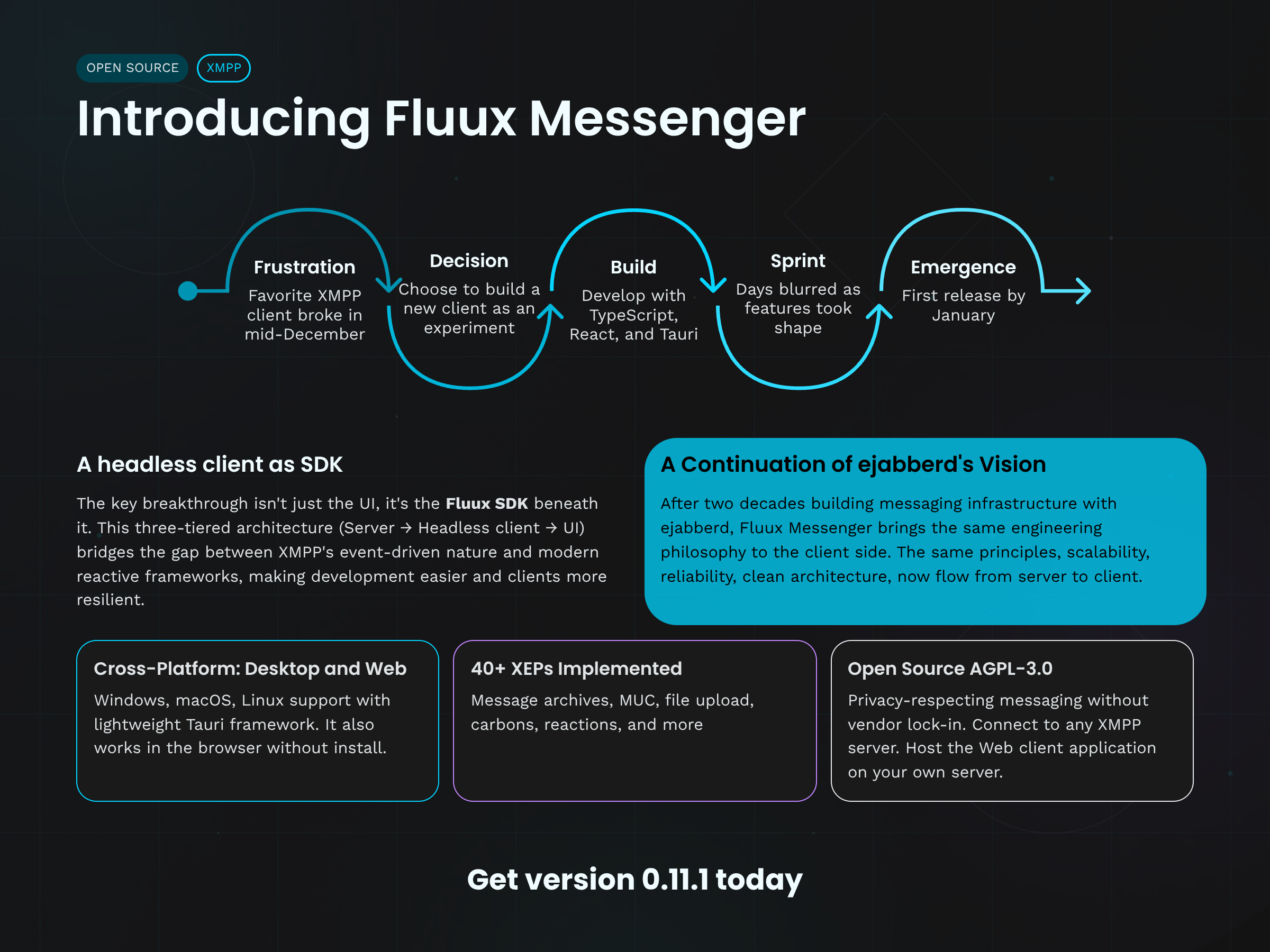

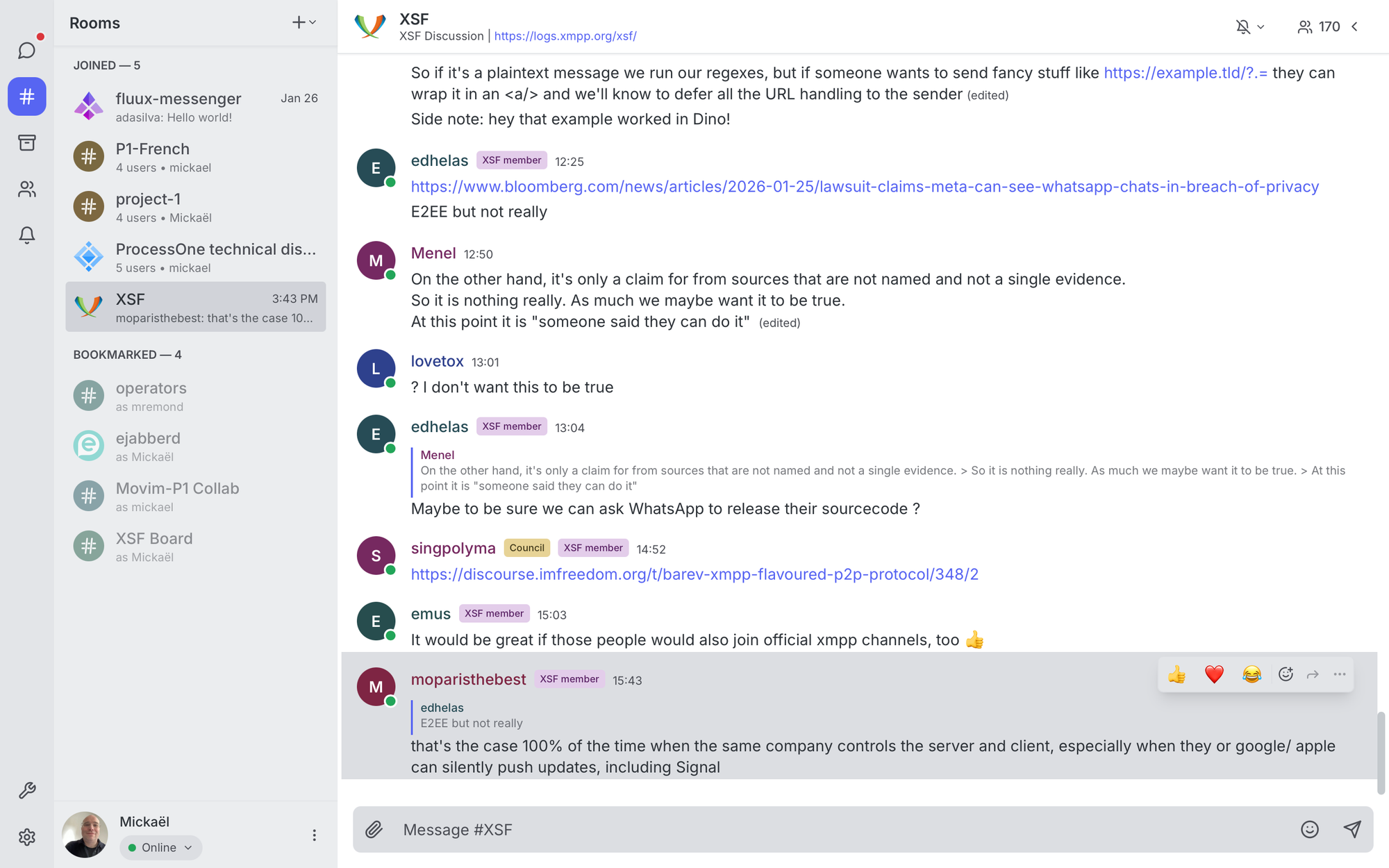

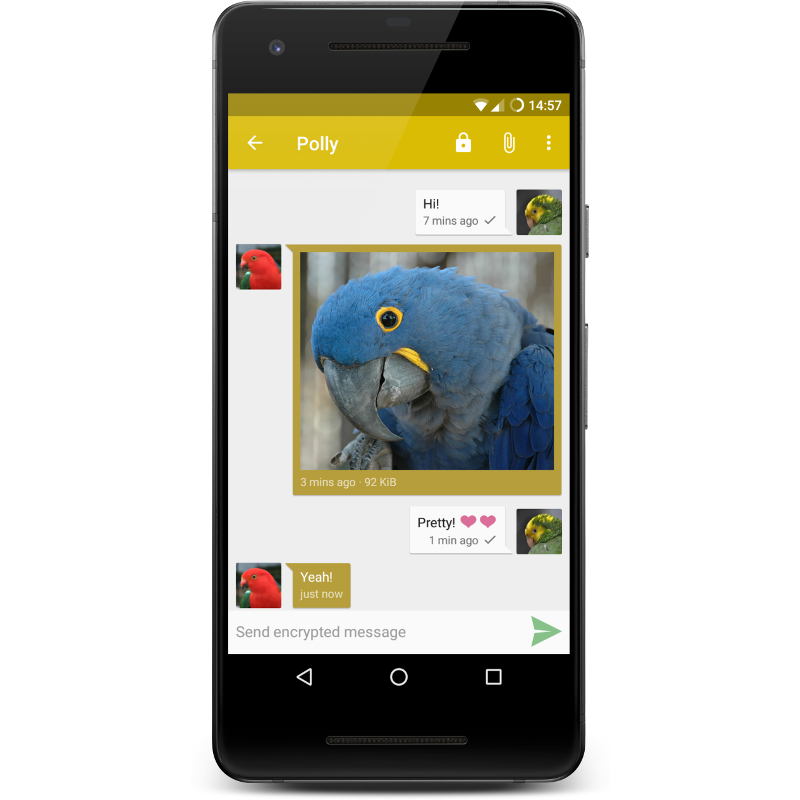

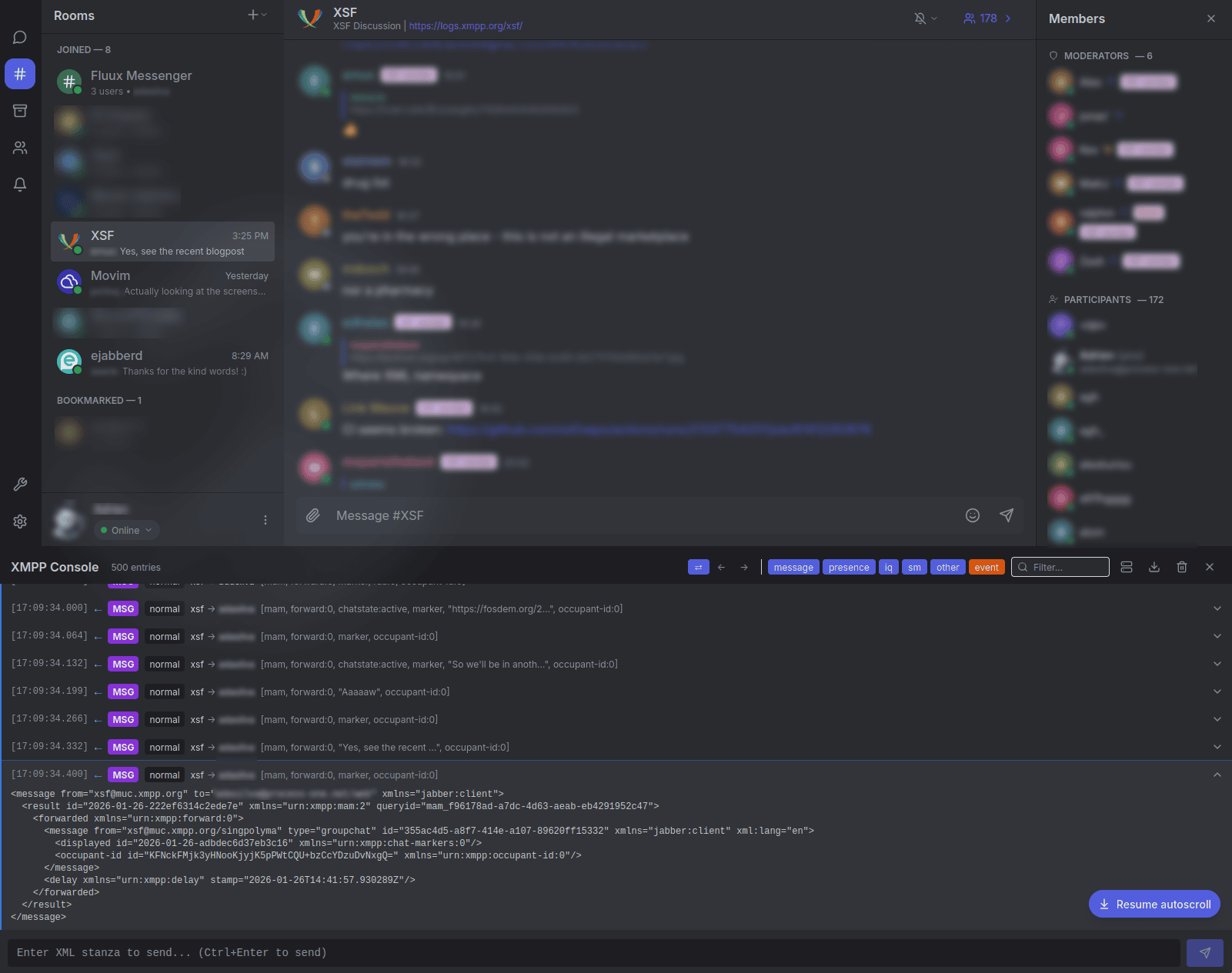

- Fluux Messenger, a modern, cross-platform XMPP client for communities and organizations, has released versions 0.11.3, 0.12.0, 0.12.1, 0.13.0, 0.13.1 and 0.13.2, with a list of additions, new features, improvements and bugfixes far longer than we could ever mention here! Go straight to the changelog for all the details!

Fluux Messenger main window and XMPP console

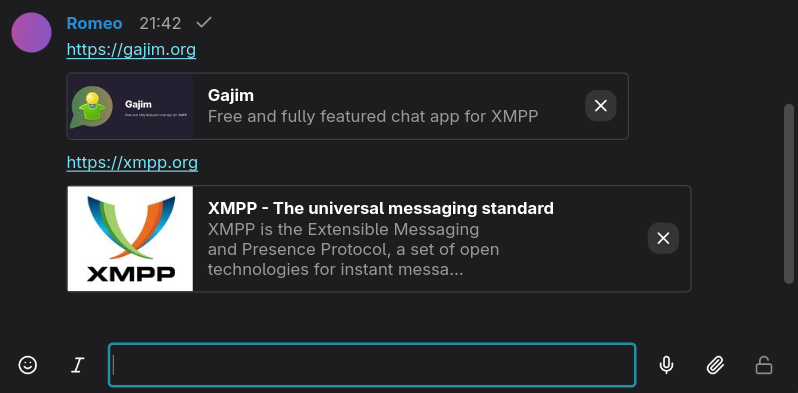

- Gajim has released version 2.4.4 of its free and fully featured chat app for XMPP. This release comes with link previews, many improvements for macOS, and bugfixes. Thank you for all your contributions! You can take a look at the changelog for all the details.

Link previews in Gajim 2.4.4

- Monal has released version 6.4.18 for iOS and macOS.

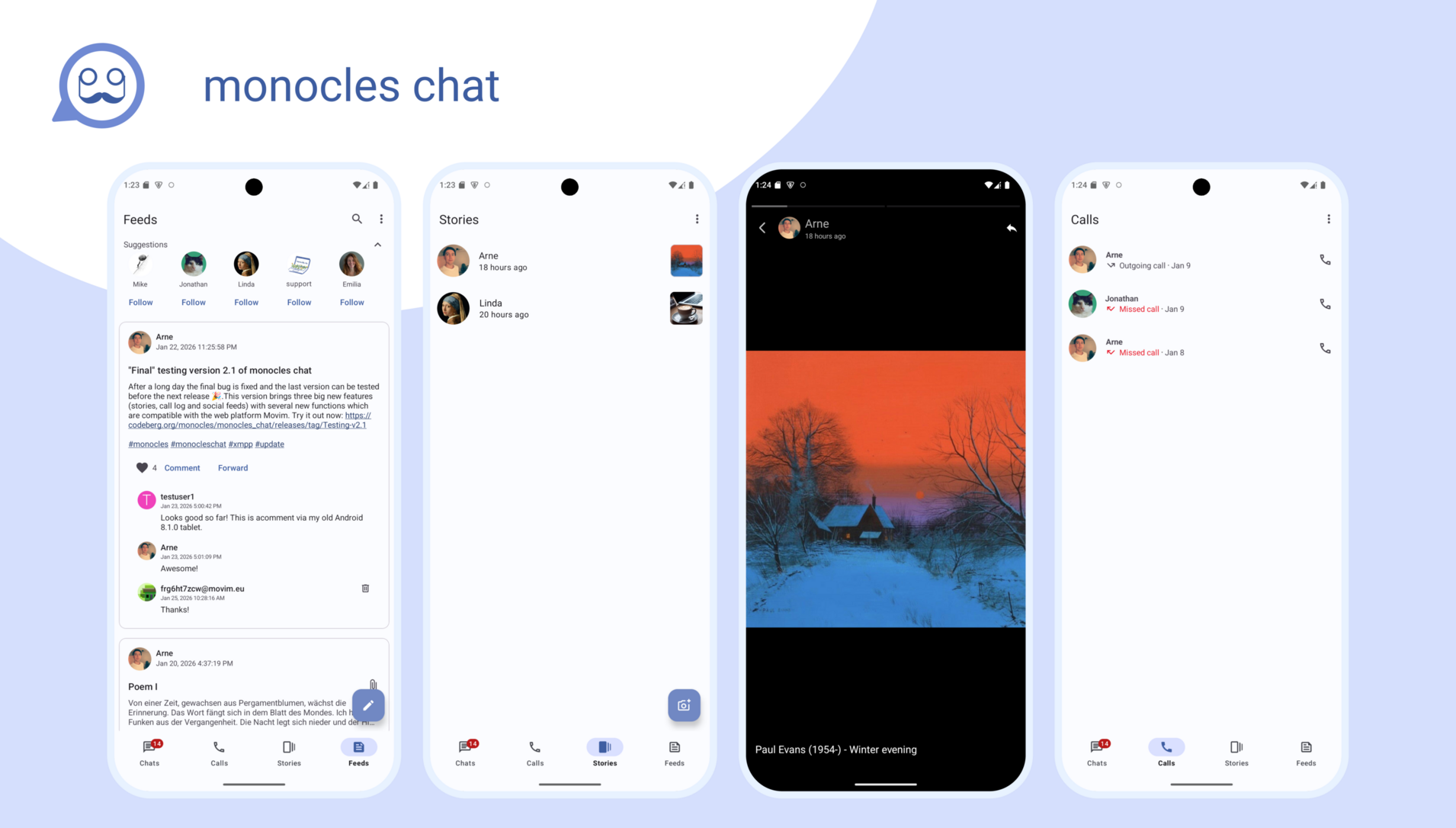

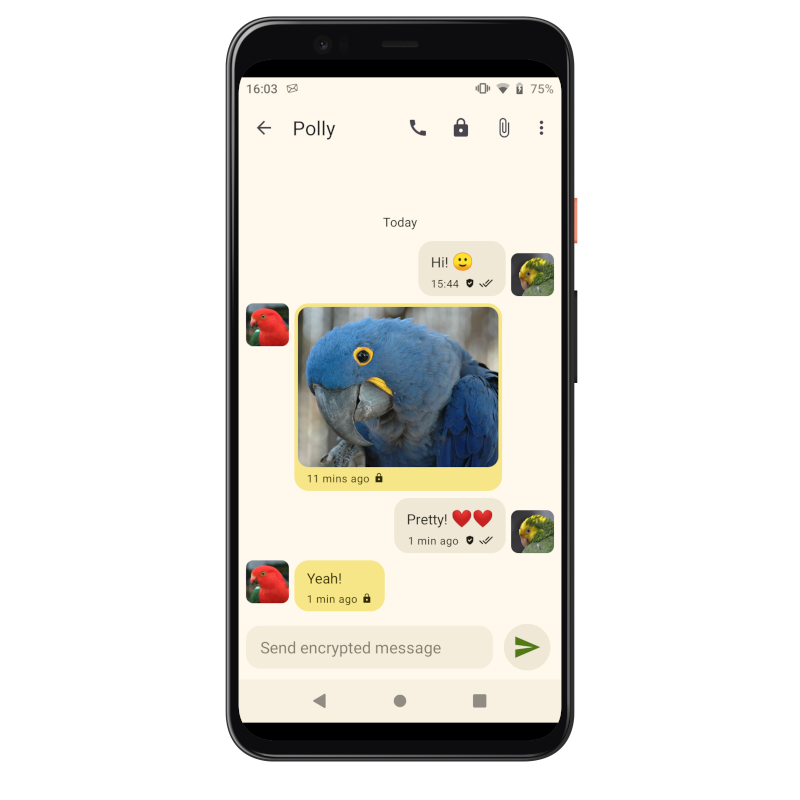

- Monocles has released versions 2.1.2 and 2.1.3 of its chat client for Android. The former release brings fixes for message retraction, images sent as link and infinite recursion in

TagEditorView, adds support for links in posts, disables publish button after click to prevent double posts, refactored message correction UI and a change in the social feed pubsub access model. The latest, adds pause and resume story on delete dialog, fixes for progress bar handling and contact lookup for stories and brings fixes from Conversations, plus updated translations. - Profanity has released version 0.16.0 of its console based XMPP client written in C. This release brings fixes for OTR detection, OMEMO startup, overwriting new accounts when running multiple instances, reconnect when no account has been set up yet, adds a new

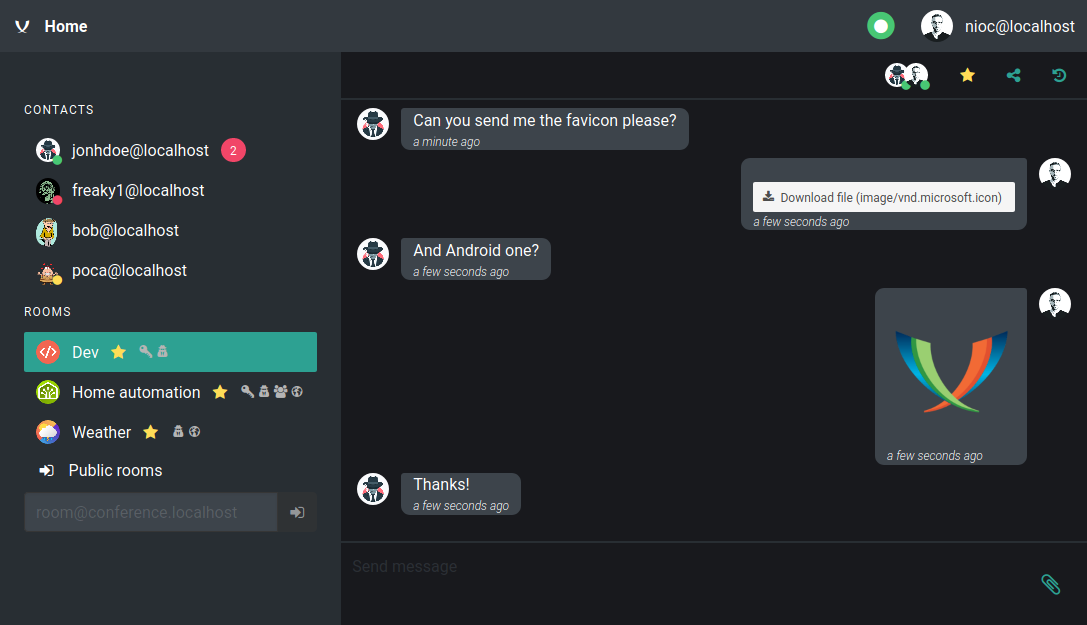

/changescommand that allows the user to compare the modifications of the runtime configuration and the saved configuration among many other fixes and improvements. Make sure to read the changelog for all the details! - xmpp-web has released version 0.11.0 of its lightweight web chat client for XMPP server.

XMPP-Web main window

XMPP Servers

- ProcessOne is pleased to announce the bugfix release of ejabberd 26.02. Make sure to read the changelog for all the details and a complete list of fixes and improvements in this release.

XMPP Libraries & Tools

- python-nbxmpp, a Python library that provides a way for Python applications to use the XMPP network, version 7.1.0 has been released. Full details on the changelog.

- QXmpp, the cross-platform C++ XMPP client and server library, versions 1.13.1, 1.14.1, 1.14.2 and 1.14.3 have been released. Full details on the changelog.

- Siltamesh, a simple bridge between Meshtastic and XMPP networks, version 0.2.0 has been released.

- Slidge version 0.3.7 has been released. You can check the intermediate changelog from 0.3.6 to 0.3.7 for all the details.

- Slixmpp, the MIT-licensed XMPP library for Python 3.7+, versions 1.13.0 and 1.13.2 have been released. You can read their respective official release announcements here and here for all the details.

- xmpppy, a Python library that is targeted to provide easy scripting with Jabber, version 0.7.3 has been released. Full details on the changelog.

Extensions and specifications

The XMPP Standards Foundation develops extensions to XMPP in its XEP series in addition to XMPP RFCs. Developers and other standards experts from around the world collaborate on these extensions, developing new specifications for emerging practices, and refining existing ways of doing things. Proposed by anybody, the particularly successful ones end up as Final or Active - depending on their type - while others are carefully archived as Deferred. This life cycle is described in XEP-0001, which contains the formal and canonical definitions for the types, states, and processes. Read more about the standards process. Communication around Standards and Extensions happens in the Standards Mailing List (online archive).

Proposed

The XEP development process starts by writing up an idea and submitting it to the XMPP Editor. Within two weeks, the Council decides whether to accept this proposal as an Experimental XEP.

- Link Metadata

- This specification describes how to attach metadata for links to a message.

New

- Version 0.1.0 of XEP-0510 (End-to-End Encrypted Contacts Metadata)

- Accepted as Experimental by council vote (dg)

- Version 0.1.0 of XEP-0511 (Link Metadata)

- Accepted as Experimental by council vote (dg)

Deferred

If an experimental XEP is not updated for more than twelve months, it will be moved off Experimental to Deferred. If there is another update, it will put the XEP back onto Experimental.

- No XEPs deferred this month.

Updated

- Version 1.26.0 of XEP-0001 (XMPP Extension Protocols)

- Surface (and correct) the source control information.

- Surface the publication URL (although I assume anyone reading this has figured that one out by now).

- Surface the contributor side of things.

- Add bit about XEP authors making PRs if they don’t exist - this is “new” rather than documenting existing practice.

- Add bit about PRs getting XEP author approval (existing practice hithertofore undocumented).

- Add bit about Council (etc) adding authors if they drop off (existing practice hithertofore undocumented).

- Add note to clarify that Retraction doesn’t mean Deletion (existing practice, documented, but has been misunderstood before). (dwd)

- Version 1.1.0 of XEP-0143 (Guidelines for Authors of XMPP Extension Protocols)

- Reflect preference for GitHub pull requests for initial submission,

- PRs to contain only one changed XEP. (dwd)

- Version 0.8.0 of XEP-0353 (Jingle Message Initiation)

- Adapt usage of JID types to real-world usage:

- Send JMI responses to full JID of initiator instead of bare JID

- Send JMI `<finish/>` element to full JID of both parties (melvo)
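For readers unfamiliar with the distinction in the XEP-0353 entry above: a bare JID has the form `localpart@domain`, while a full JID appends a `/resource` part that identifies one connected device. The following is only a minimal Python sketch of that difference. The helper name `split_jid` and the example addresses are hypothetical and not taken from the XEP or from any XMPP library.

```python
from typing import Optional, Tuple


def split_jid(jid: str) -> Tuple[str, Optional[str]]:
    """Split a JID into (bare JID, resource).

    Hypothetical helper for illustration only. The resource is
    everything after the first "/", and is None for a bare JID.
    """
    bare, sep, resource = jid.partition("/")
    return bare, (resource if sep else None)


# A full JID addresses one specific device of the user...
full = "romeo@montague.example/orchard"
# ...while a bare JID addresses the account as a whole.
bare = "romeo@montague.example"

print(split_jid(full))
print(split_jid(bare))
```

With the change described above, a JMI response would be addressed to the full form (the initiating device) rather than to the bare account address.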

Last Call

Last calls are issued once everyone seems satisfied with the current XEP status. After the Council decides whether the XEP seems ready, the XMPP Editor issues a Last Call for comments. The feedback gathered during the Last Call can help improve the XEP before returning it to the Council for advancement to Stable.

- No XEPs in Last Call this month.

Stable

- No stable XEPs this month.

Deprecated

- No XEPs deprecated this month.

Rejected

- No XEPs rejected this month.

Spread the news

Please share the news on other networks:

- Mastodon

- Movim

- Bluesky

- YouTube

- Lemmy instance (unofficial)

- Reddit (unofficial)

- XMPP Facebook page (unofficial)

Subscribe to the monthly XMPP newsletter

Subscribe

Also check out our RSS Feed!

Looking for job offers or want to hire a professional consultant for your XMPP project? Visit our XMPP job board.

Newsletter Contributors & Translations

This is a community effort, and we would like to thank translators for their contributions. Volunteers and more languages are welcome! Translations of the XMPP Newsletter will be released here (with some delay):

Contributors:

- To this issue: emus, cal0pteryx, Gonzalo Raúl Nemmi, Ludovic Bocquet, sokai, XSF iTeam

Translations:

- French: Adrien Bourmault (neox), alkino, anubis, Arkem, Benoît Sibaud, mathieui, nyco, Pierre Jarillon, Ppjet6, Ysabeau

- Italian: Mario Sabatino, Roberto Resoli

- Portuguese: Paulo

Help us to build the newsletter

This XMPP Newsletter is produced collaboratively by the XMPP community. Each month’s newsletter issue is drafted in this simple pad. At the end of each month, the pad’s content is merged into the XSF GitHub repository. We are always happy to welcome contributors. Do not hesitate to join the discussion in our Comm-Team group chat (MUC) and thereby help us sustain this as a community effort. You have a project and want to spread the news? Please consider sharing your news or events here, and promote it to a large audience.

Tasks we do on a regular basis:

- gathering news in the XMPP universe

- short summaries of news and events

- summary of the monthly communication on extensions (XEPs)

- review of the newsletter draft

- preparation of media images

- translations

- communication via media accounts

Unsubscribe from the XMPP Newsletter

For this newsletter either log in here and unsubscribe or simply send an email to newsletter-leave@xmpp.org. (If you have not previously logged in, you may need to set up an account with the appropriate email address.)

License

This newsletter is published under the CC BY-SA license.