Remote Patient Monitoring (RPM) is changing how care is delivered. By tracking health data through connected devices outside traditional settings, it helps clinicians act sooner, reduce readmissions, and focus resources where they’re most needed. With rising NHS pressures and growing demand for digital care, RPM is becoming central to how both public and private providers support long-term conditions, recovery, and hospital-at-home models. This guide explores how RPM works, where it’s gaining ground, and why healthcare leaders are paying attention.

What is Remote Patient Monitoring?

RPM refers to systems that collect patient data remotely using at-home or mobile devices, which clinicians then review. These systems can work in real time or at scheduled intervals and are often integrated with a patient’s electronic medical record (eMR) or practice management system (PAS). The goal is to monitor patients without needing in-person visits, while still keeping clinical oversight.

Devices Commonly Used in RPM

The success of any RPM programme depends on the devices that power it. These tools collect, track, and transmit key health data- either in real time or at regular intervals. Whether issued by clinicians or connected through a patient’s tech, they underpin the delivery of safe, responsive remote care.

These devices support the management of a wide range of conditions, including diabetes, heart disease, COPD, asthma, sleep disorders, high-risk pregnancies, and post-operative recovery.

| Device Type | Primary Function |

| Blood pressure monitors | Measure systolic/diastolic pressure for hypertension monitoring |

| Glucometers | Track blood glucose levels for diabetes management |

| Pulse oximeters | Monitor oxygen saturation (SpO2) and heart rate |

| ECG monitors | Detect heart rhythm abnormalities such as arrhythmias |

| Smart inhalers | Track usage and technique for asthma or COPD |

| Wearable sensors | Monitor movement, sleep, temperature and heart rate |

| Smart scales | Measure weight trends, often linked to fluid retention or post-op care |

| Sleep apnoea monitors | Detect interrupted breathing patterns during sleep |

| Maternity tracking devices | Monitor fetal heart rate, maternal blood pressure, or contractions |

These tools can either be prescribed by clinicians or integrated with consumer health tech like smartphones or smartwatches.

For example, a cardiologist may use a mobile ECG app paired with a sensor to track arrhythmias from home.

Safety and Regulation

The boundary between wellness wearables and clinical devices is still being defined. While some tools simply gather data, others have therapeutic applications, such as managing pain or respiratory issues. This matters for compliance. Devices that influence treatment decisions must meet higher regulatory standards, particularly around safety, accuracy, and data security. Developers and suppliers need to stay aligned with MHRA or equivalent guidance to avoid risk to both patients and business continuity.

How Remote Patient Monitoring Works

RPM follows a structured process:

- Data collection from connected medical devices

- Secure transmission to a clinical platform

- Integration with existing systems

- Analysis and alerting via algorithms or clinician review

- Intervention where thresholds are breached

- Feedback to patients through apps or direct communication

RPM Adoption is Accelerating

Globally, the uptake of RPM is increasing. In the US, patient usage rose from 23 million in 2020 to 30 million in 2024 and is forecast to reach over 70 million by the end of 2025 (HealthArc). The NHS is also scaling digital pathways. Over 100,000 patients have been supported by virtual wards in England, with NHS England increasing capacity to 50,000 patients per month by winter 2024. RPM is central to this shift.

Core Technologies in RPM

These technologies work behind the scenes to capture, transfer, and make sense of patient data, so that clinicians have timely, accurate insights to act on.

Wearables and sensors

Track vital signs like heart rate, oxygen levels, and movement patterns.

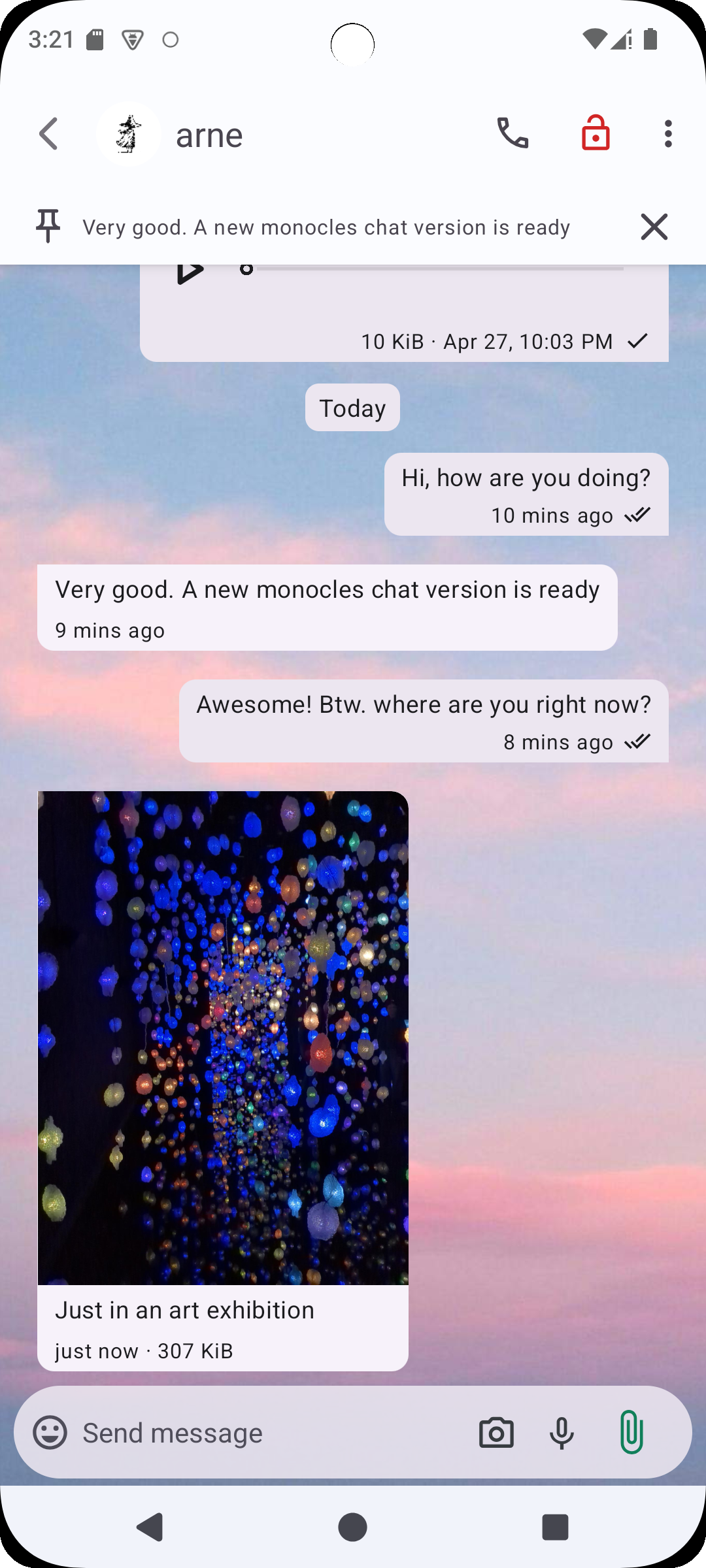

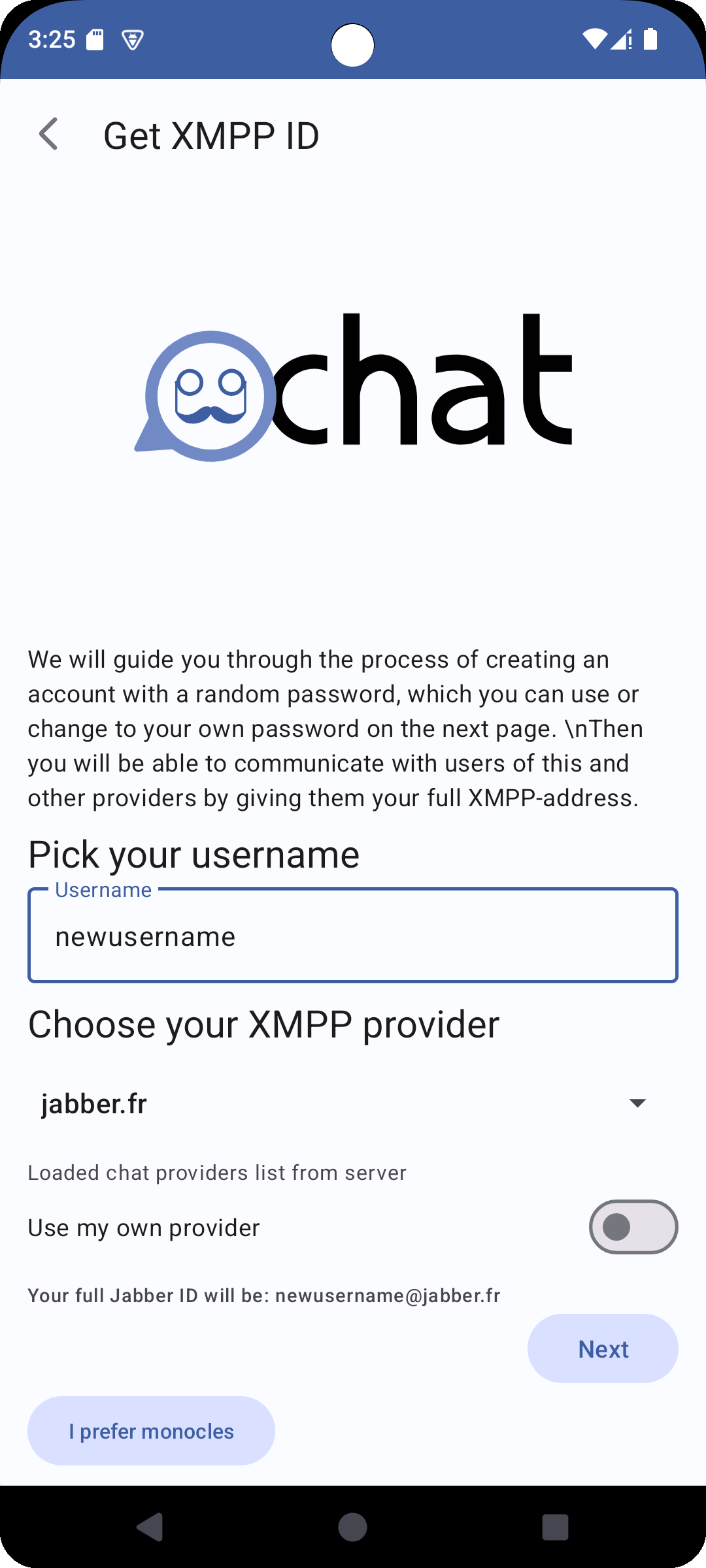

Mobile health apps

Used by patients to report symptoms, manage medications, and receive support.

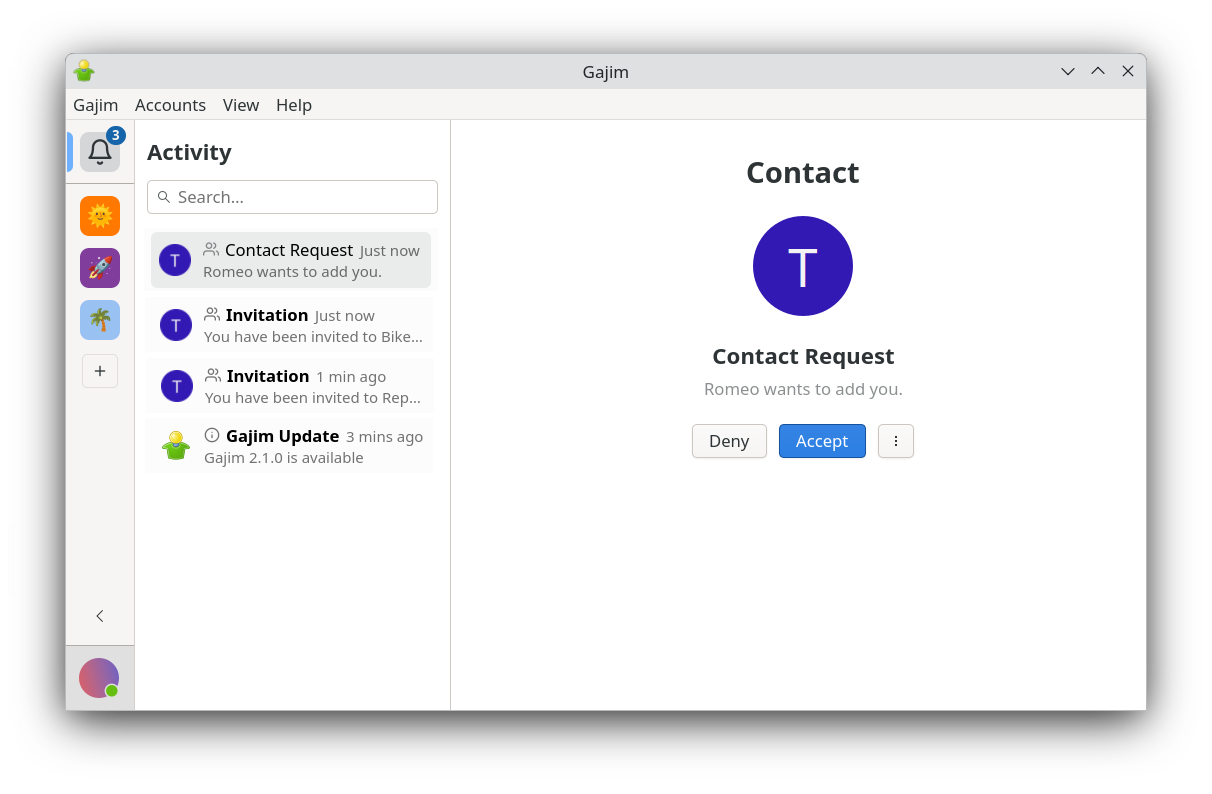

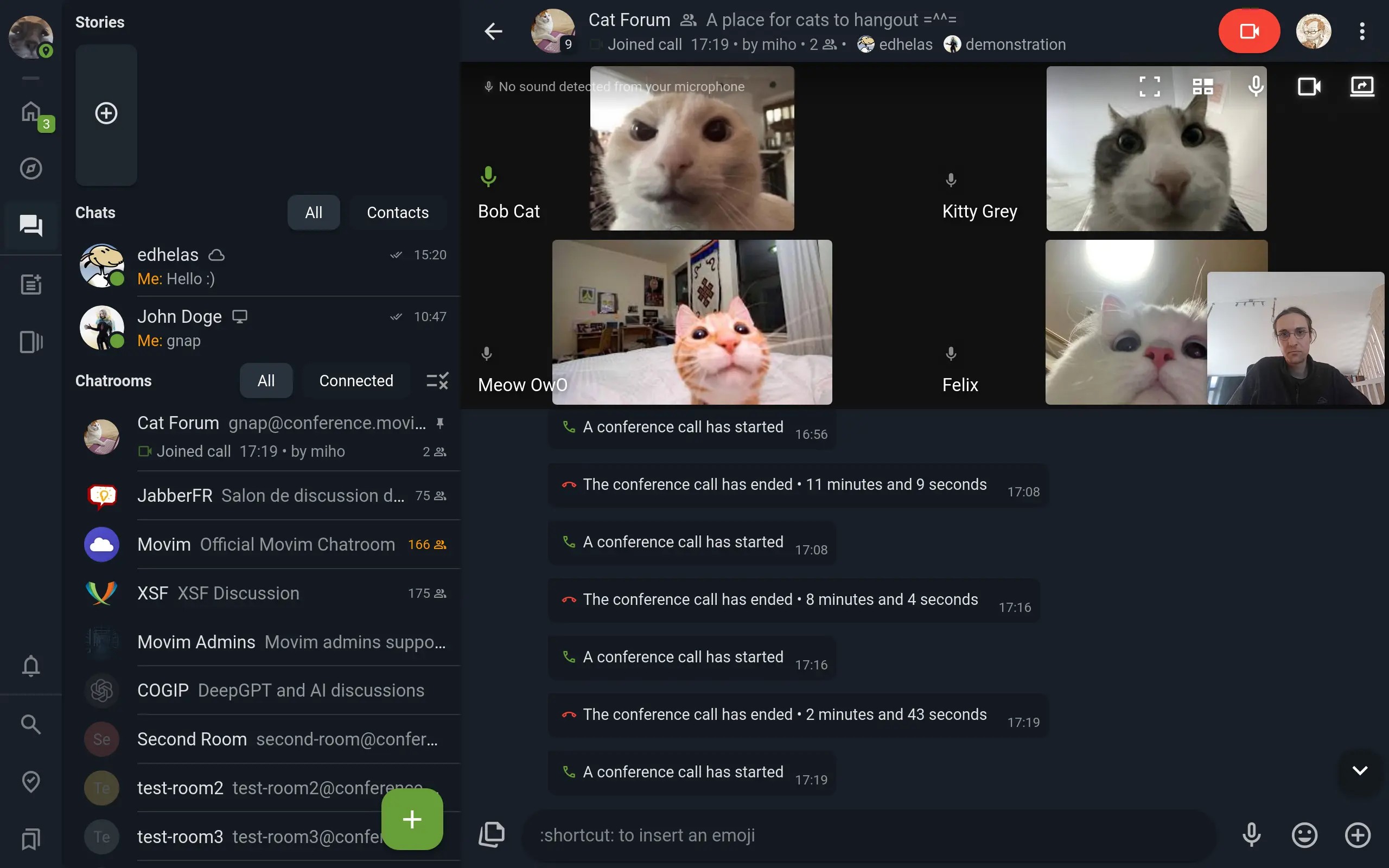

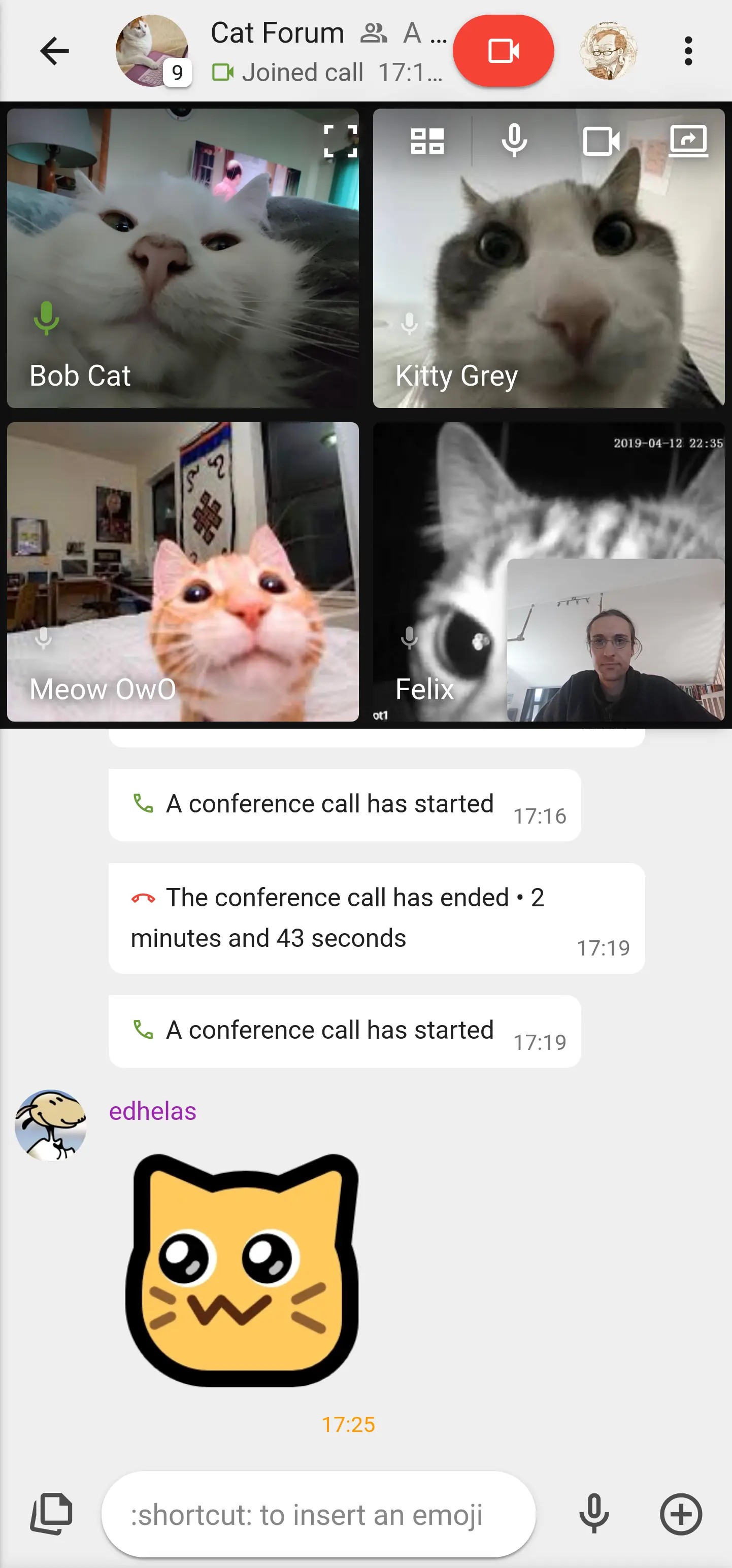

Telemedicine platforms

Enable direct communication between patients and clinicians through chat, phone, or video.

Analytics engines

Help identify risk trends or changes in condition using automated flagging systems.

Why RPM Matters for Healthcare Leaders

The NHS is under sustained pressure. According to the NHS Confederation, over 7.6 million people are currently on elective care waiting lists, while ambulance delays and A&E overcrowding persist. RPM supports care outside the hospital by freeing up beds, reducing readmissions, and improving patient flow. At a system level, RPM:

- Cuts avoidable admissions

- Shortens hospital stays

- Reduces time-to-intervention

- Frees up staff capacity

- Lowers infection risk

Cost savings are also significant. Some estimates suggest RPM can reduce total healthcare expenditure by 20–40%, particularly for chronic conditions.

RPM in Action: Key Use Cases

The real impact of RPM is seen in the way it supports different stages of the care journey. Here are some of the most common and most effective use cases.

Chronic Disease Management

RPM allows patients with diabetes, COPD, or hypertension to track metrics like blood pressure, oxygen levels or glucose and share results with care teams. Interventions can be made earlier, reducing the chance of deterioration or escalation.

Mental Health Monitoring

Wearables can capture signs of stress or low mood by tracking heart rate variability, sleep patterns, and daily activity. RPM helps clinicians spot early signs of relapse in conditions like anxiety and depression, particularly when patients are less likely to reach out themselves.

Post-Operative Recovery

Patients recovering from surgery can be monitored for wound healing, temperature spikes, or pain trends. A 2023 BMC Health Services Research study showed RPM helped reduce six-month mortality rates in patients discharged after heart failure or COPD treatment.

Elderly Care

For older adults, RPM supports safety without constant in-person contact. Devices with fall detection, medication reminders, and routine tracking can help carers respond quickly to changes, reducing emergency visits and supporting independent living.

Clinical Trials

RPM speeds up trials by reducing the need for travel, offering more continuous data, and improving patient adherence.

Pandemic and Emergency Response

During COVID-19, RPM enabled safe monitoring of symptoms like oxygen saturation or fever, supporting triage and resource allocation when systems were overwhelmed.

Benefits Across the System

RPM not only benefit patients, but it also improves outcomes and operations across every part of the health and care system. Here’s how you can gain from its use.

| Key Benefits | |

| Patients | Greater independence, faster recovery, fewer hospital visits |

| Clinicians | Real-time data visibility, increased capacity, and better focus on complex cases |

| Carers | Peace of mind, early alerts, and less reliance on manual checks |

| ICBs & Providers | Lower readmissions, improved resource use, and more coordinated care |

Where Tech Comes In

Behind every reliable RPM system is a reliable tech stack. In high-risk, high-volume environments like healthcare, platforms need to be built for stability, security and scalability.

That’s why some platforms use programming languages such as Erlang and Elixir, trusted across the healthcare sector for their ability to manage high volumes and maintain uptime. These technologies are being adopted in healthcare systems that prioritise performance, security, and scalability.

When built correctly, RPM infrastructure allows providers to:

- Maintain continuous monitoring across patient groups

- Respond quickly to emerging clinical risks

- Scale services confidently as demand increases

- Minimise risk from tech failure or data breach

To conclude

Patients recover better when they’re in a familiar place, supported by the right tools and professionals. Hospitals function best when their time and space are reserved for those who truly need them. Remote Patient Monitoring is not just a digital upgrade. It’s a strategic shift, towards smarter, more responsive care.

Ready to explore how RPM could support your digital care strategy? Get in touch.

The post What is Remote Patient Monitoring? appeared first on Erlang Solutions.