We are living in an economy that rarely sleeps. Payments clear late at night. Payroll runs in the background. Businesses expect every digital touchpoint to work when they need it.

Most people do not think about this shift. They assume the systems behind it will hold.

That assumption carries weight.

Fintechs, including small and mid-sized ones, now sit inside the basic infrastructure of how money moves. Their uptime affects cash flow. Their stability affects trust. When something breaks, real businesses feel it immediately.

Expectations changed faster than most systems did.

This follows on from our earlier piece, From Prototype to Production, which explored how early technical shortcuts surface as systems scale. Here, we look at what happens next, when those systems become part of the infrastructure businesses rely on every day.

When “tech downtime” becomes infrastructure failure

Outages no longer feel contained. A single failure can affect services that millions of people and businesses depend on.

Large providers experience this as much as small ones. When a shared platform falters, the impact spreads quickly. More than 60 percent of outages now come from third-party providers rather than internal systems, highlighting how tightly connected the ecosystem has become.

The true cost is trust

For fintechs, the impact is immediate because money is involved.

A payment delay blocks cash flow. A failed identity check stops onboarding. A stalled platform damages credibility.

For an SME, this can play out over the course of a single day. Payroll does not process in the morning. Supplier payments stall in the afternoon. Customer support queues fill up while teams wait for systems to recover. Even short interruptions create knock-on effects that last far longer than the outage itself.

And the numbers reflect that risk:

- £25,000 is the average cost of downtime for SMEs.

- 40% of customers consider switching providers after a single outage.

- Fintech revenue losses account for approximately US$37 million of downtime-related costs each year.

At this level of dependency, downtime stops being a technical issue. It becomes an infrastructure failure.

Fintechs have become infrastructure whether they intended to or not

Fintech services have moved beyond convenience. They now underpin everyday economic activity for businesses that depend on constant access to money, credit, and financial data.

This shift shows up in uptime expectations. Platforms that handle financial activity are measured against standards once reserved for mission-critical systems. Even brief disruption can have outsized consequences when services are expected to remain available throughout the day.

Customers do not adjust expectations based on company size or stage. If money flows through a service, users expect it to be available around the clock. When it is not, the failure feels systemic rather than technical. What breaks is not just functionality, but confidence.

That is the environment fintech leaders are operating in now.

Where resilience typically breaks down

Most fintech systems do not fail because the idea was weak. They fail because early decisions prioritised speed over durability.

Teams optimise for launch. They prove demand. They ship quickly. That works early on, but systems designed for experimentation often struggle once demand becomes constant rather than occasional.

Shortcuts that felt harmless early on start to surface under pressure. Shared components become bottlenecks. Manual processes turn into operational risk. Integrations that worked at low volume become fragile at scale. Recent analysis shows average API uptime has fallen year over year, adding more than 18 hours of downtime annually for systems dependent on third-party APIs.
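To put the 18-hour figure in availability terms, assume a service that is meant to run around the clock over a 365-day year (8,760 hours). The conversion is:

$$
\frac{18\ \text{h}}{365 \times 24\ \text{h}} = \frac{18}{8760} \approx 0.0021 \approx 0.21\%
$$

So an extra 18 hours of downtime a year is roughly a 0.2 percentage point drop in availability, for example from 99.9% (about 8.8 hours of downtime a year) to about 99.7%.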

Common pressure points include:

- Shared components that act as single points of failure

- Manual operational work that cannot keep up with growth

- Third-party dependencies with limited visibility or control

- Architecture built for bursts of usage instead of continuous demand

These are not accidental outcomes. They are the result of trade-offs made under pressure. Funding milestones, launch timelines, and growth targets shape architecture as much as technical skill does.

When outages happen, they rarely trace back to a single bug. They trace back to earlier choices about what mattered and what could wait.

From product thinking to infrastructure-grade systems

Product thinking is about features and speed. Infrastructure thinking is about continuity.

Infrastructure-grade systems assume failure will happen. They are built to contain it, recover quickly, and keep the wider platform running. The goal is not perfection. The goal is staying available.

Continuous availability is now expected in financial services. Systems have to be updated and maintained without noticeable downtime, because users do not tolerate interruptions when money is involved.

This approach does not slow teams down. It reduces risk. Deployments feel routine instead of stressful. Engineering effort shifts away from incident response and toward steady improvement.

Over time, this changes how organisations operate. Teams plan differently. Roadmaps become more realistic. Reliability becomes part of delivery rather than a separate concern.

Elixir and the always-on economy

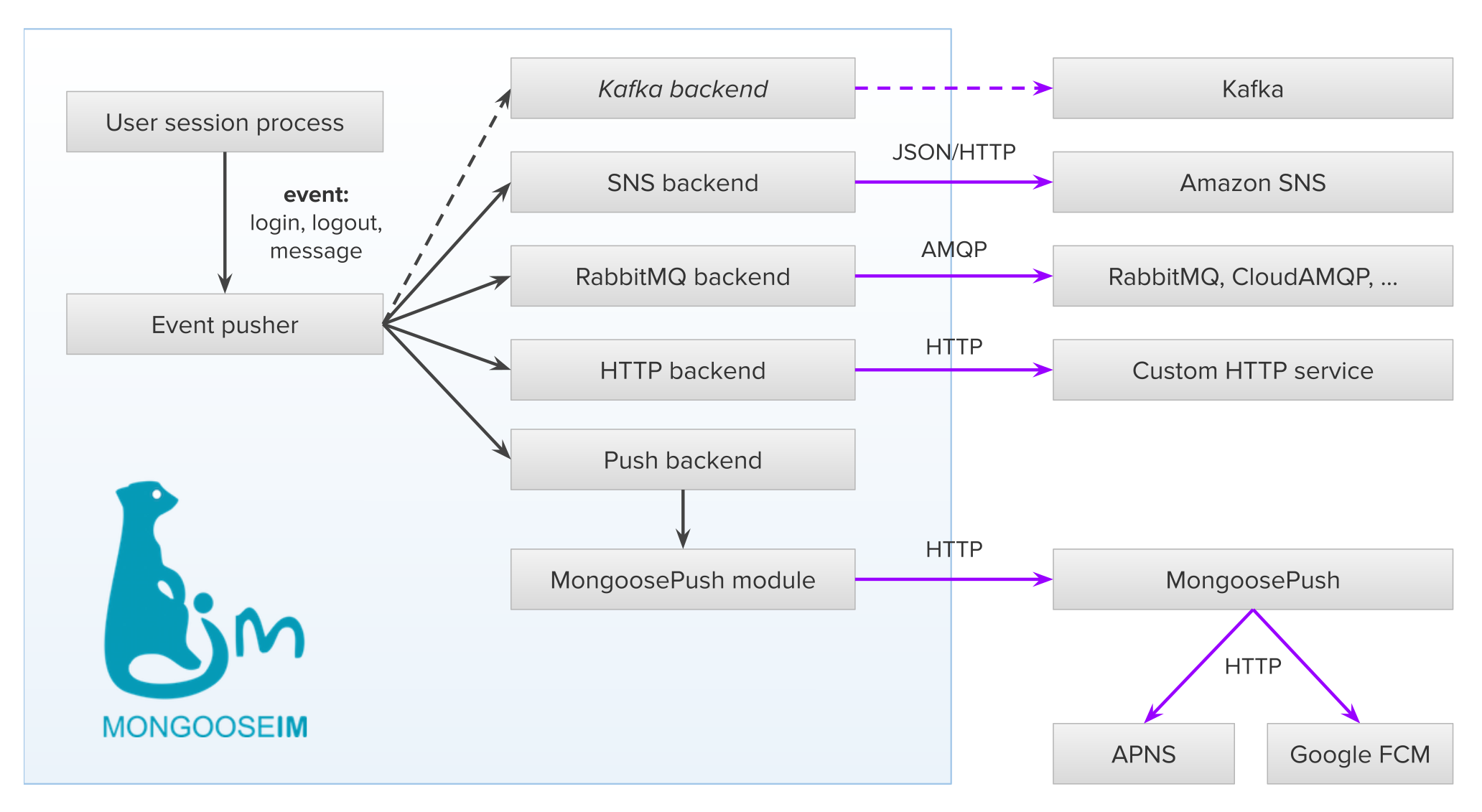

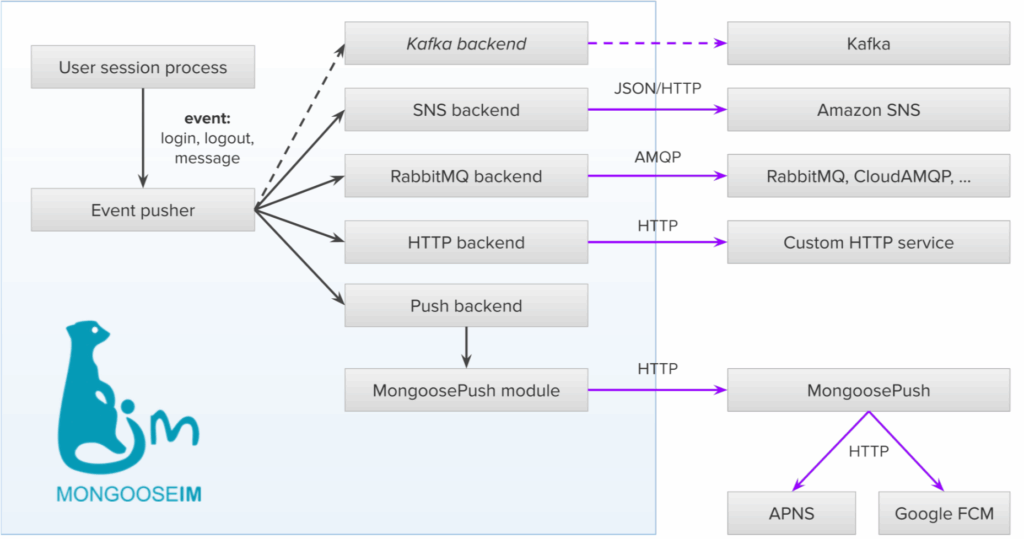

The Elixir programming language is designed for systems that are expected to stay available. It runs on the BEAM, the virtual machine behind Erlang, which was built at Ericsson for telecom systems where downtime carried real consequences.

That background shows up in how Elixir applications are structured. Systems are composed of many small, isolated processes rather than large, tightly coupled components. When something fails, it fails locally. Recovery is expected. The wider system continues to operate.
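In Elixir, this restart-and-contain behaviour comes from OTP supervisors, for example a `Supervisor` using the `:one_for_one` strategy, which by default allows three restarts within five seconds before giving up. The sketch below models only the one-for-one idea in Python, for illustration. The `Worker` and `OneForOneSupervisor` classes and the `"bad_input"` message are hypothetical. The workers are ordinary objects rather than isolated BEAM processes, and the restart limit is a simple lifetime count rather than OTP's time window.

```python
from typing import Callable, Dict


class Worker:
    """Hypothetical worker that keeps its own private state."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.handled = 0  # state owned by this worker only

    def handle(self, message: str) -> str:
        if message == "bad_input":
            # Simulate an unexpected failure inside one worker.
            raise ValueError(f"{self.name} cannot process {message!r}")
        self.handled += 1
        return f"{self.name} ok"


class OneForOneSupervisor:
    """Restarts only the worker that failed, up to max_restarts times.

    Simplification: OTP counts restarts within a time window; this sketch
    counts them over the supervisor's lifetime.
    """

    def __init__(self, factories: Dict[str, Callable[[], Worker]], max_restarts: int = 3) -> None:
        self.factories = factories
        self.max_restarts = max_restarts
        self.restarts: Dict[str, int] = {name: 0 for name in factories}
        self.children: Dict[str, Worker] = {name: make() for name, make in factories.items()}

    def dispatch(self, name: str, message: str) -> str:
        try:
            return self.children[name].handle(message)
        except Exception as exc:
            self.restarts[name] += 1
            if self.restarts[name] > self.max_restarts:
                # Too many failures: give up and let a higher level decide.
                raise RuntimeError(f"{name} restarted too often; escalating") from exc
            # Contain the failure: replace just this child with a fresh instance.
            self.children[name] = self.factories[name]()
            return f"{name} restarted"


if __name__ == "__main__":
    sup = OneForOneSupervisor({
        "payments": lambda: Worker("payments"),
        "kyc": lambda: Worker("kyc"),
    })
    print(sup.dispatch("kyc", "check"))           # kyc ok
    print(sup.dispatch("payments", "pay"))        # payments ok
    print(sup.dispatch("payments", "bad_input"))  # payments restarted
    print(sup.children["kyc"].handled)            # 1 (sibling untouched)
    print(sup.children["payments"].handled)       # 0 (fresh state after restart)
```

The failing `payments` worker comes back with clean state while `kyc` keeps its count, which is the "fails locally" behaviour described above in miniature.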

Elixir in fintech

This matters in fintech, where failure is inevitable and interruptions are costly. External services misbehave. Load changes without warning. Elixir applications are built to absorb those conditions and recover quickly without cascading outages.
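One common way to stop a misbehaving external service from cascading is to bound how long a caller waits for it. In Elixir this is often written as `Task.async/1` followed by `Task.yield/2` and, on timeout, `Task.shutdown/2`, which also stops the task process. The Python sketch below shows only the deadline-plus-fallback idea. The provider functions, timeout values, pool size and fallback string are hypothetical. Unlike `Task.shutdown`, Python cannot forcibly stop the worker thread, so the slow call simply finishes in the background.

```python
import concurrent.futures
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Shared pool for outbound calls; the size is an arbitrary example value.
_POOL = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def slow_provider(account_id: str) -> str:
    """Hypothetical third-party API that has started to hang."""
    time.sleep(0.5)
    return f"balance for {account_id}"


def fast_provider(account_id: str) -> str:
    """Hypothetical third-party API behaving normally."""
    return f"balance for {account_id}"


def call_with_deadline(fn: Callable[[str], T], arg: str, timeout_s: float, fallback: T) -> T:
    """Run fn(arg), but stop waiting after timeout_s seconds.

    The caller gets `fallback` instead of blocking, so one slow
    dependency does not hold up everything queued behind it.
    """
    future = _POOL.submit(fn, arg)
    try:
        return future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        # The thread keeps running until fn returns; its result is discarded.
        return fallback


if __name__ == "__main__":
    print(call_with_deadline(fast_provider, "acc-1", 1.0, "unavailable"))  # balance for acc-1
    print(call_with_deadline(slow_provider, "acc-1", 0.1, "unavailable"))  # unavailable
```

In a real system the fallback might be a cached value or a clear "try again shortly" response; the point is that the failure stays contained to one call instead of spreading to everything that depends on it.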

Teams working in Elixir tend to spend less time managing fragile behaviour and more time improving core functionality. Systems evolve instead of being replaced. Reliability becomes part of the foundation rather than a promise teams struggle to maintain.

For fintechs operating in an always-on economy, that approach aligns with the expectations already placed on them.

Reliability as a competitive advantage

Reliable systems can completely change how a fintech operates day to day.

For growing fintechs, uptime supports trust and regulatory confidence. Customers stay because the platform behaves predictably. Growth becomes steadier because teams are not constantly reacting to incidents. Downtime costs make this real, especially for small businesses that lose revenue when systems are unavailable.

For larger providers, reliability reduces operational strain. Fewer incidents mean fewer emergency fixes and fewer difficult conversations with partners and regulators. Teams spend more time improving core services and less time managing fallout.

Reliability also shapes perception. Platforms that stay up become easier to trust with deeper integrations and higher volumes. Over time, that trust compounds and turns stability into a real advantage, even if it is rarely visible from the outside.

To conclude

The always-on economy creates real opportunity for fintechs, but it also raises expectations that many platforms were not originally built to meet.

The question is whether your system has the resilience to operate as infrastructure day after day. Designing for resilience early makes it far easier to scale without introducing fragility later on.

If you are a fintech and want to build with reliability in mind, get in touch.

The post The Always-On Economy: Fintech as Critical Infrastructure appeared first on Erlang Solutions.
